Exploring the Dark Side of Generative AI: The Rise of Cyber Threats in the Digital Age

When we try to predict the cyber threats that will dominate the coming year, we usually look back at the past year, learn from it, and extrapolate how those threats might evolve. At times, however, a technological breakthrough (unintentionally) has a significant impact on the evolution of cyber threats.

In 2023, we saw the rise of generative AI, which took over the digital world within months. Hardly any major product now fails to flaunt ‘AI’ in its feature list. Many people started to rely more on ChatGPT (and the like) than on Google searches. Generative AI clearly represents a major breakthrough in tech, and that has not gone unnoticed in cybercrime.

If we are to summarise some of the top advantages of generative AI, the list will look something like this:

- Efficiency and speed

- Scalability

- Cost reduction

Now, if you were to adopt a cybercriminal’s mindset, would you consider bringing such capabilities into your operations? You’d be foolish not to, and criminals are in fact doing exactly that. In this article, however, we test how applicable generative AI actually is in its malicious use cases.

Using Deepfakes for Social Engineering

Let’s start with the most obvious one. Deepfakes already existed in the “pre-genAI era” but recently evolved into a much more sophisticated social engineering toolkit simply due to the three major promises of using GenAI that we mentioned in the introduction.

Malicious entities increasingly utilize deepfake technology to mimic company leaders and political figures in sophisticated social engineering schemes. By harnessing easily accessible media such as videos, interviews, and photographs, these perpetrators can convincingly replicate the identities of crucial figures, integrating deepfake audio and visual content into conference calls or through VOIP systems. Recent incidents reveal that these actors have succeeded in mimicking several individuals simultaneously, thereby lending credibility to their deceptive efforts. The availability of public footage and sound bites featuring company executives now poses a significant security risk, potentially being exploited in harmful social engineering plots.

Organizations face severe financial and reputational damage from deepfake-driven scams, particularly when executed by those seeking monetary gain. A notable incident in January 2024 involved using deepfakes to impersonate a Chief Financial Officer and other top executives during a video call, deceiving an employee into transferring $25.6 million. Furthermore, state-backed entities are believed to be using deepfakes to gather political intelligence and conduct disinformation campaigns. For example, in June 2022, The Guardian disclosed an attempt where deepfakes were used to dupe European mayors during a call by pretending to be Kyiv’s mayor, Vitali Klitschko.

To counter the misuse of deepfake technology, many companies offering commercial voice cloning and text-to-speech services have introduced consent requirements. However, despite these precautions, malicious actors continue to develop methods to circumvent these barriers.

But there is a major drawback when it comes to generating convincing video deepfakes: latency.

Based on several studies (and validated by our own testing), two kinds of latency stand out:

- Latency with Text-to-Speech conversion

- Latency introduced by running deepfake video generation on a live call

Finally, a significant amount of human intervention is still needed to acquire and pre-process audio and video clips so that the ML models can be trained appropriately.

However, even if these drawbacks are real today, we believe this will soon change. Open-source contributions to LLMs are advancing notably. Moreover, multi-billion-dollar industries are looking to adopt the legitimate use cases of such technology in their products: the video gaming industry and film studios, in particular, are investing heavily in AI-generated content to push the boundaries of realism. Such investment always speeds up the evolution of tech, though most of the time without enough consideration for its security.

Our analysis was based on Tortoise TTS, an open-source voice imitation tool.

Adaptive AI-generated Malware

Probably the most lucrative use case of them all, as far as cyber threats go, is the ability to develop malicious code by relying purely on AI.

The development of malware and exploits through generative AI is a field likely being explored by cybercriminals, as noted in a January 2024 report by the UK’s National Cyber Security Centre (NCSC). Such endeavors are expected to be the domain of highly resourced groups possessing access to exclusive, high-grade malware and exploit data. The report suggests that groups with fewer resources might find it challenging to craft effective malware and exploits using generative AI trained on publicly available datasets.

YARA rules, published by security experts for identifying and categorizing malware via specific code patterns, present a paradox. While meant to bolster defense mechanisms, these rules also inadvertently furnish hackers with valuable knowledge on how to tweak their malware to slip past detection systems. Yet, altering malware to dodge these detection measures typically demands considerable effort and resources, acting as a deterrent to attackers.

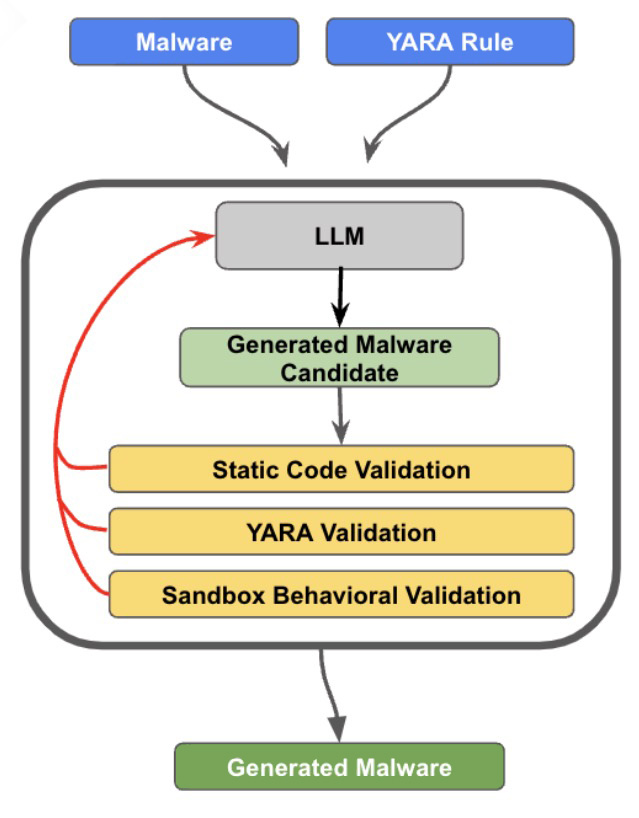
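The paradox is easier to see with a toy example. The sketch below is not YARA and does not use its engine or syntax; it is a minimal Python stand-in for a string-based rule with an "N of them" style condition. The rule name, the indicator strings, and both sample snippets are invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ToyRule:
    """A simplified stand-in for a string-based rule (not real YARA syntax)."""
    name: str
    strings: Tuple[bytes, ...]
    min_matches: int  # similar in spirit to an "N of them" condition


def matches(rule: ToyRule, sample: bytes) -> bool:
    """Return True when at least min_matches rule strings occur in the sample."""
    hits = sum(1 for s in rule.strings if s in sample)
    return hits >= rule.min_matches


# Hypothetical indicator strings, invented for this illustration.
rule = ToyRule(
    name="toy_browser_stealer",
    strings=(b"Get-BrowserProfile", b"Export-SavedLogin", b"upload_results"),
    min_matches=2,
)

# Two harmless snippets: the second only renames the identifiers.
original = b"function Get-BrowserProfile {}; function Export-SavedLogin {}"
renamed = b"function Get-Prof {}; function Export-Items {}"

print(matches(rule, original))  # True: two indicator strings are present
print(matches(rule, renamed))   # False: no indicator strings remain
```

Because the published rule spells out exactly which strings it looks for, renaming two identifiers is enough to defeat this toy version. Real rules are usually richer than this, but the example shows why purely string-based signatures tend to be brittle once they are public.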

In an initiative to circumvent YARA rule detection, a project was undertaken by Insikt Group from Recorded Future involving the modification of malware source code through generative AI. The test involved STEELHOOK, a PowerShell tool employed by APT28 for extracting browser data from Google Chrome and Microsoft Edge. The process entailed submitting STEELHOOK’s code alongside pertinent YARA rules to a language model, which was then tasked with altering the code to evade detection. The revised malware underwent several checks to confirm its syntax accuracy, its undetectability by YARA, and the retention of its initial functionality. The feedback from these tests was used to refine the AI’s output.

This iterative process enabled researchers to evade simple YARA rule detection. Nonetheless, the capabilities of current AI models are limited by the scope of the context they can analyze at once, restricting tests to simpler scripts and malware variants. Additionally, these models struggle to fulfill the triad of prerequisites: error-free syntax, evading YARA detection, and maintaining original functionality. The study did not extend to intricate YARA detection strategies, such as pattern matching, which would necessitate additional preparatory steps and validations.

This exploration into AI-facilitated malware adaptation underscores both the possibilities and limitations of using AI to bypass conventional detection frameworks like YARA rules. While AI can assist in evading certain detection methods, it also highlights the need for more sophisticated detection techniques resistant to such evasion attempts. Nonetheless, the current state of AI technology requires significant refinements to generate error-free and functional malware code consistently, pointing to the necessity for comprehensive testing and feedback mechanisms.

Impersonating Brands

It’s increasingly apparent that malicious entities leverage generative AI in influence operations. Cutting-edge language models and image generators enable these actors to expand their reach, create content in multiple languages, and tailor messages for specific audiences.

On December 5, 2023, a report from Insikt Group researchers unveiled “Doppelgänger,” an operation with ties to Russia focused on manipulating public opinion. This network, utilizing counterfeit news platforms, deceptive tactics, and social media to spread its message, aims to disrupt military support for Ukraine and incite political divisions in the United States, France, Germany, and Ukraine. It was noted for employing a modest amount of content that seemed to be produced by AI.

The challenge of reliably detecting AI-created text persists among experts, partly due to the absence of a unified method for assessing whether content used in these campaigns is AI-generated. To gauge the true threat of AI in mass-producing manipulative content, an initiative was launched to mimic authentic Russian and Chinese news sites by incorporating AI-generated articles and selecting images tailored to the accompanying text.

Researchers managed to craft content specifically designed to appeal to certain audiences, utilizing advanced techniques to align the material with their political leanings. The system not only takes a set influence goal or authentic articles as context but also applies generative AI to clone legitimate news site designs, thus facilitating a surge in fake content production. To choose images that complement the AI-written articles, the team employed a versatile, publicly accessible AI model.

Moreover, our SOC team detects many attempts to impersonate banking websites, and these improve the success rate of phishing campaigns targeting banks’ customers. Using AI-generated content instead of copying the HTML/CSS styling produces a more convincing fake website, complete with correct pathing, text translations, and abused logos.

This method not only boosts the credibility of the disinformation but also significantly reduces costs compared to traditional content creation methods. For example, generating website content and articles could cost less than $0.01 each, making AI an economical option compared to employing content writers, even from regions with lower labor costs.

Beyond the drastic cost savings, this strategy has the potential to increase ad revenue for these disinformation sites, turning them into profitable operations.
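To put the cost argument in perspective, here is a back-of-the-envelope calculation. Only the $0.01 per-article figure comes from the text above (where it is an upper bound); the human writer rate and the campaign size are hypothetical placeholders, not sourced numbers.

```python
def campaign_cost(articles: int, cost_per_article: float) -> float:
    """Total content cost for a campaign of the given size."""
    return articles * cost_per_article


AI_COST_PER_ARTICLE = 0.01     # upper bound quoted in the text above
HUMAN_COST_PER_ARTICLE = 5.00  # hypothetical placeholder, not a sourced rate
ARTICLES = 1_000               # hypothetical campaign size

ai_total = campaign_cost(ARTICLES, AI_COST_PER_ARTICLE)
human_total = campaign_cost(ARTICLES, HUMAN_COST_PER_ARTICLE)

print(f"AI-generated: ${ai_total:.2f}")
print(f"Human-written: ${human_total:.2f}")
print(f"Ratio: {human_total / ai_total:.0f}x")
```

Under these assumed numbers, the content that would cost a few thousand dollars to commission costs about ten dollars to generate. The exact ratio depends entirely on the placeholder rate, but the gap stays large across any plausible value.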

Mitigations

- The digital personas of executives, including their voices and visual representations, have become a significant vulnerability for corporations, necessitating a re-evaluation of security strategies against impersonation in targeted schemes. To mitigate risks associated with large transactions and critical operations, companies should adopt various secure communication and verification methods beyond just conference calls and VOIP, like encrypted instant messaging or email services.

- Especially for entities in the media and public sectors, monitoring the unauthorized use of their brand or content in misinformation campaigns is crucial. Our clients that leverage our Brand Protection Service have the advantage of keeping tabs on new domain registrations and digital content that misappropriates their brand.

- To guard against the possibility of cyber adversaries crafting AI-powered polymorphic malware, organizations must bolster their defenses with layered and behavior-based detection systems. Tools such as Sigma, Snort, and sophisticated YARA rules are expected to continue effectively identifying malware threats well into the future. Our SOC team constantly adapts its detection toolkit based on the latest Threat Intelligence and evolving technology.

- It is critical for sectors deemed essential — like defense, government, energy, manufacturing, and transport — to carefully manage and cleanse publicly available images and footage of sensitive assets and locations to prevent exploitation by hostile parties.
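As a rough illustration of the brand-monitoring mitigation above, the sketch below flags newly registered domains that resemble a brand name. It is a minimal example, not a description of any particular service: the brand `examplebank`, the allowlist, the digit-for-letter substitutions, the feed of domains, and the 0.8 similarity threshold are all assumptions. It uses only Python’s standard `difflib` module.

```python
from difflib import SequenceMatcher

# Assumed digit-for-letter swaps; a real list would be much longer.
SUBSTITUTIONS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def normalise(label: str) -> str:
    """Lowercase, undo the assumed digit swaps, and drop hyphens."""
    return label.lower().translate(SUBSTITUTIONS).replace("-", "")


def is_lookalike(domain: str, brand: str, legitimate: set,
                 threshold: float = 0.8) -> bool:
    """Flag a domain whose first label contains or closely resembles the brand."""
    domain = domain.lower()
    if domain in legitimate:
        return False  # the brand's own domains are never flagged
    # Simplification: only the leftmost label is inspected.
    label = normalise(domain.split(".")[0])
    target = normalise(brand)
    if target in label:
        return True
    return SequenceMatcher(None, label, target).ratio() >= threshold


# Hypothetical feed of newly registered domains.
legitimate = {"examplebank.com"}
feed = [
    "examplebank.com",
    "examp1ebank-login.net",
    "exampelbank.org",
    "weather-news.com",
]
flagged = [d for d in feed if is_lookalike(d, "examplebank", legitimate)]
print(flagged)
```

Here the second and third domains are flagged: one hides the brand behind a digit swap and a suffix, the other transposes two letters. A production monitor would also need to handle subdomains, internationalised domain names, and a tuned threshold, none of which this sketch attempts.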

Closing Thoughts

While researching the current state of generative AI and its potential for malicious use, we anticipated the functionality such tools could provide but were still impressed by the capabilities that are already possible.

However, more advanced use cases, such as AI-generated exploits and malware, will likely need more time before they can be produced reliably at scale. Sophisticated threat actors will probably take the lead here since they can afford to invest more in such research and development. Once advances in technology and research bring costs down, we can expect more AI-generated malware to occupy the cyber threat landscape, potentially already by the end of 2024.

AI-generated content, such as videos and images, is our next biggest threat. It will directly contribute to the success rate of social engineering attacks, which we anticipate will increase in 2024 as attackers leverage deepfakes. However, there will be a massive gap between sophisticated, convincing deepfakes and those produced with limited investment. As we mentioned, convincing deepfakes currently require a lot of human intervention and specific skill sets. This will be the main limiting factor for casual hackers.

While generative AI will undoubtedly shape the new digital world in many ways, we must not neglect the impact of its adversarial use. Technological advancement must go hand-in-hand with security during the development lifecycle to ensure that the product will be consumed by its users in a safe and intended fashion.

About the author

David Kasabji

Principal Threat Intelligence Analyst

David Kasabji is a Principal Threat Intelligence Analyst at the Conscia Group. His main responsibility is to deliver actionable intelligence in different formats according to target audiences, ranging from Conscia’s own cyberdefense, all the way to the public media platforms. His work includes collecting, analyzing, and disseminating intelligence, reverse engineering obtained malware samples, crafting TTPs […]